Graph patterns from OCL, a performance evaluation

######Tags: EMF-IncQuery publication

Model-driven tools use model queries for many purposes, including validation of well-formedness rules and specification of derived features. Most of the declarative model queries available in industry appear to be written in OCL. Graph pattern based queries, however, have a number of advantages due to their more abstract specification, such as performance improvements through advanced query evaluation techniques. As query performance can be a key issue with large models, evaluating graph patterns instead of OCL queries could be useful in practice. The measurements presented here justify automatically mapping OCL to equivalent graph patterns by showing that one can deliver efficient, incremental query evaluation for a subset of OCL expressions.

Author: Gábor Bergmann

This benchmark page was created to supplement the article: Translating OCL to Graph Patterns, accepted into the programme of MODELS'14.

###Overview of the approach

Since most of the declarative model queries available in industry appear to be written in OCL, the above-mentioned benefits can only be reaped by translating OCL queries into graph patterns. This is not always possible, as OCL is more expressive. Nevertheless, by extending prior work, the paper presents an automated mapping, OCL2IQ, that transforms a large sublanguage of OCL expressions to equivalent graph patterns in the dialect of EMF-IncQuery.

The justification of the proposed mapping is that one can deliver efficient, incremental query evaluation for a subset of OCL expressions by transforming them to graph patterns of equivalent semantics, and applying EMF-IncQuery. To demonstrate this, a subset of an existing performance benchmark for well-formedness (invariant) constraint checking was applied.

The Train Benchmark defines a number of well-formedness constraints (of which only SignalNeighbor is used here) in a custom metamodel, and measures the constraint checking performance of various model query tools as they process automatically generated instance models of various sizes conforming to the metamodel. The goal is to provide near instantaneous feedback on constraint violations as the (simulated) user is editing a large model. The workload and measured performance indicators involve: (phase 1) reading the model, (phase 2) checking it for any inconsistencies as defined by the well-formedness constraint, (phase 3) simulating a transformation / manual editing of the model that performs a predefined sequence of modifications, and (phase 4) checking the updated model as well for inconsistencies. For fair comparison of stateless tools against incremental ones, the most relevant performance indicators are phase 1+2 ("Batch Validation") execution time and phase 3+4 ("Continuous Validation") execution time (and of course the memory footprint).

###Translating OCL to Graph Patterns

The procedure of mapping OCL expressions to graph patterns has been presented in the MODELS'14 paper Translating OCL to Graph Patterns. Due to restrictions on paper length, not all OCL elements could be discussed in the paper; complete treatment of the OCL Standard Library is available in this extended on-line version:

- Gábor Bergmann: Translating OCL to Graph Patterns - EXTENDED VERSION

  - Extended version of the MODELS 2014 paper, containing an appendix with complete coverage of the built-in OCL operations (OCL Standard Library) defined in the OMG OCL Standard 2.4.

###Case study

The following case study description reuses content from this Train Benchmark site. The benchmark uses a domain-specific model of a railway system that contains most typical class diagram constructs. This metamodel will be instantiated, and concepts of the metamodel can be used in queries.

Metamodel

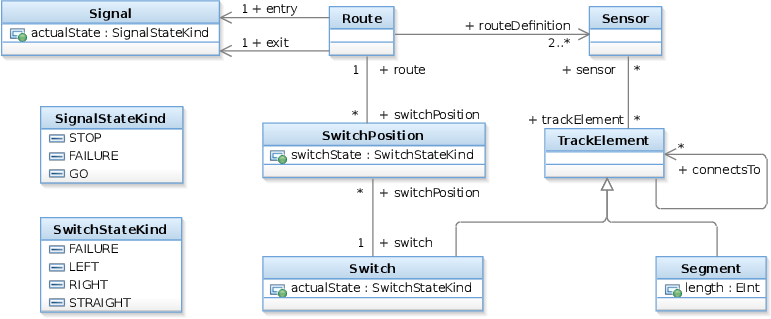

The metamodel is defined as an EMF metamodel:

A train route can be defined by a set of sensors between two neighboring signals. The state of a signal can be stop (when it is red), go (when it is green) or failure. Sensors are associated with track elements, which can be a track segment or a switch. The status of a switch can be left, right, straight or failure. A route can have associated switch positions, which describe the required state of a switch belonging to the route. Different route definitions can specify different states for a specific switch.

Constraints

Several high-level requirements can be specified that must hold for any valid instance models of a train system. The queries of constraints return the set of constraint violating elements. The current benchmark evaluation is restricted in scope to SignalNeighbor, which is the most complex of Train Benchmark constraints.

| Name | SignalNeighbor |
|---|---|
| Textual description | A route is incorrect if it has a signal, and a sensor connected to another sensor by two track elements, but there is no other route that connects the same signal and the other sensor. This pattern checks for the absence of cycles, so the efficiency of joins is tested. One-way navigable references are also present in the constraint, so their efficient evaluation is tested as well. |
| Graphical graph pattern |  |
| Graph pattern | pattern signalNeighbor(R1) = { find exitSignalSensor(SigA, R1, Sen1A); find connectingSensors(Sen1A, Sen2A); find rDefinition(R3A, Sen2A); R3A != R1; neg find entrySignalSensor(SigA, _R2A, Sen2A); } pattern exitSignalSensor(Sig, R1, Sen1) = { find exitSignal(R1, Sig); find rDefinition(R1, Sen1); } pattern entrySignalSensor(Sig, R2, Sen2) = { find entrySignal(R2, Sig); find rDefinition(R2, Sen2); } pattern entrySignal(R, Sig) = { Route(R); Signal(Sig); Route.Route_entry(R, Sig); } pattern exitSignal(R, Sig) = |
| OCL query | context Route: oclIsKindOf(Route) and self.Route_exit->exists(signal \| self.Route_routeDefinition.Sensor_trackElement.TrackElement_connectsTo.TrackElement_sensor->exists(sen2 \| Route.allInstances()->exists(route2X \| (route2X <> self) and route2X.Route_routeDefinition->includes(sen2) ) and not ( Route.allInstances()->exists(route2 \| route2.Route_routeDefinition->includes(sen2) and route2.Route_entry = signal ) ) ) ) |
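To make the constraint concrete, the following is a minimal Python sketch of a naive, stateless check that follows the navigation steps of the OCL query. It is not the benchmark's Java implementation: the classes, field names and the `build_example` helper are hypothetical stand-ins for the EMF metamodel, chosen only for illustration.

```python
from dataclasses import dataclass, field


# Hypothetical in-memory stand-ins for the metamodel classes.
# eq=False keeps identity-based equality and hashing, as for model objects.
@dataclass(eq=False)
class Signal:
    name: str


@dataclass(eq=False)
class TrackElement:
    name: str
    connects_to: list = field(default_factory=list)  # TrackElement_connectsTo
    sensor: "Sensor | None" = None                    # TrackElement_sensor


@dataclass(eq=False)
class Sensor:
    name: str
    track_elements: list = field(default_factory=list)  # Sensor_trackElement


@dataclass(eq=False)
class Route:
    name: str
    entry: "Signal | None" = None                         # Route_entry
    exit: "Signal | None" = None                          # Route_exit
    route_definition: list = field(default_factory=list)  # Route_routeDefinition


def signal_neighbor_violations(routes):
    """Return the routes that violate SignalNeighbor (naive nested loops)."""
    violations = []
    for r1 in routes:
        if r1.exit is None:
            continue  # without an exit signal the outer 'exists' is false
        # Sensors reachable via sensor -> track element -> connectsTo -> sensor.
        neighbours = {
            te2.sensor
            for sen1 in r1.route_definition
            for te1 in sen1.track_elements
            for te2 in te1.connects_to
            if te2.sensor is not None
        }
        for sen2 in neighbours:
            in_other_route = any(
                r is not r1 and sen2 in r.route_definition for r in routes
            )
            entered_via_signal = any(
                sen2 in r.route_definition and r.entry is r1.exit for r in routes
            )
            if in_other_route and not entered_via_signal:
                violations.append(r1)
                break
    return violations


def build_example():
    """Two routes joined correctly: r1 exits through the signal r2 enters by."""
    sig = Signal("sig")
    sen1, sen2 = Sensor("sen1"), Sensor("sen2")
    te1 = TrackElement("te1", sensor=sen1)
    te2 = TrackElement("te2", sensor=sen2)
    te1.connects_to.append(te2)
    sen1.track_elements.append(te1)
    sen2.track_elements.append(te2)
    r1 = Route("r1", exit=sig, route_definition=[sen1])
    r2 = Route("r2", entry=sig, route_definition=[sen2])
    return [r1, r2]
```

In this sketch the example model has no violations; clearing the `entry` of `r2` makes `r1` a violating route. Every call rescans all routes for each neighbouring sensor, which is the kind of repeated traversal a stateless checker has to pay for on each re-check.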

Instance model generation

The model generator instantiates the metamodel and generates EMF instance models, with sizes ranging from a few thousand elements (nodes and edges) up to approx. 14·10^9. The generator randomly introduces faulty element configurations into the model (at a low percentage). The distribution of nodes belonging to a type and the outgoing degree of typed edges are depicted in the following diagram:

The current measurements were restricted to four instance model sizes, with respectively 113K, 213K, 435K and 867K model elements. All tools were evaluated on at least two different model sizes, allowing for extrapolation of scalability.

###Benchmark description

The benchmark simulates a typical model validation scenario in a model-driven system development process where small and local modifications are applied to large models (i.e. the knowledge base changes), and well-formedness constraints are continuously re-evaluated to check the correctness of the result model and highlight design flaws for engineers. A benchmark scenario is built up from well defined phases.

Phases:

- Phase 1 - Read: In the first phase, the previously generated instance model is loaded from hard drive to memory. This includes parsing of the input, as well as initializing data structures of the tool. The latter can consume minimal time for a tool that performs only local search, but for incremental tools indexes or in-memory caches are initialized.

- Phase 2/4 - Check/re-check: In the check phases the instance model is queried for invalid elements. This can be as simple as reading the results from cache (in case of incremental techniques), or the model can be traversed based on some index. Theoretically, cache or index building can be deferred from the read phase to the check phase, but it depends on the actual tool implementation. At the end of this phase, erroneous objects must be available in a list.

- Phase 3 - Modify: In the first part of this phase, the elements that will be modified are selected. (This preparation is implemented independently of the query technology, and is thus not included in the measurements.) What is measured is the time of the second part, which consists only of edit operations on the preselected instance model elements, such as modifying attribute values or deleting relations.

During the benchmark, the time of each phase is measured with nanotime precision. This does not imply nanosecond accuracy, which is why the highest resolution in the presented diagrams is 1 ms.
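The phase structure and the reported indicators can be sketched as a small timing harness. This is an illustrative Python sketch, not the benchmark driver: `run_phases` and its callbacks `read`, `check`, `select` and `edit` are hypothetical names, and `time.perf_counter_ns` stands in for whatever nanosecond timer the actual driver uses.

```python
import time


def _ms(ns):
    """Report whole milliseconds only; finer digits are not trustworthy."""
    return ns // 1_000_000


def run_phases(read, check, select, edit, repetitions=1):
    """Time phases 1+2 together and phases 3+4 together (averaged)."""
    start = time.perf_counter_ns()
    model = read()                      # phase 1: load and initialise
    invalid = check(model)              # phase 2: first check
    batch_ns = time.perf_counter_ns() - start

    continuous_ns = 0
    for _ in range(repetitions):
        targets = select(model)         # preparation, deliberately not timed
        start = time.perf_counter_ns()
        edit(model, targets)            # phase 3: edit operations only
        invalid = check(model)          # phase 4: re-check
        continuous_ns += time.perf_counter_ns() - start

    return {
        "batch_ms": _ms(batch_ns),
        "continuous_ms": _ms(continuous_ns // repetitions),
        "invalid": invalid,
    }
```

Keeping `select` outside the timed region mirrors the rule that choosing the elements to modify is independent of the query technology, while the integer division in `_ms` reflects the decision to report nothing finer than 1 ms.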

###Model Manipulation Workload

The performance of incremental techniques may depend on what kind of change workload is performed in phase 3.

In the UserScenario, a developer is assumed to sit in front of a model editor. The user first reads the model from file. Next a check is performed, reporting a low number of constraint-violating elements (0.06% of model elements for the RouteSensor case), so that the result can be understood and resolved by a human using the editor. During the modification the user always performs 10 random edits (a fixed, low constant), which increases the number of erroneous elements. These edits modify only some elements of the model, and do not add or remove modules containing multiple instance model elements. In particular, every edit step (phase 3) sets the entry reference of a randomly preselected Route to null. The workflow actually executes phase 3+4 a total of 10 times; the reported measurement values are the average time of one repetition (edit + 1 re-check).

The ModelXFormScenario, on the other hand, attempts to simulate a transformation that applies an automated (albeit trivial) fix to some detected constraint violations. In particular, the edit step (phase 3) sets the exit reference of 10 randomly preselected violating Routes to null, thereby satisfying these instances of the constraint. The edit is immediately followed by the re-check.
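The opposite effect of the two edit kinds on the SignalNeighbor result set can be illustrated with a deliberately flattened toy model. This is a sketch under stated assumptions: routes are plain dictionaries, sensor adjacency is given directly as pairs instead of being navigated through track elements, and all function names are hypothetical.

```python
def violating_routes(routes, neighbours):
    """routes: name -> {"entry", "exit", "sensors"};
    neighbours: set of (sen1, sen2) pairs of connected sensors."""
    found = set()
    for name, route in routes.items():
        if route["exit"] is None:
            continue  # no exit signal, so the constraint cannot be violated
        for sen1, sen2 in neighbours:
            if sen1 not in route["sensors"]:
                continue
            holders = [n for n, r in routes.items() if sen2 in r["sensors"]]
            in_other_route = any(n != name for n in holders)
            entered_by_signal = any(
                routes[n]["entry"] == route["exit"] for n in holders
            )
            if in_other_route and not entered_by_signal:
                found.add(name)
    return found


def user_scenario_edit(routes, name):
    """UserScenario-style edit: clear the entry reference of a route."""
    routes[name]["entry"] = None


def model_xform_edit(routes, name):
    """ModelXFormScenario-style edit: clear the exit of a violating route."""
    routes[name]["exit"] = None


def demo_model():
    """Hypothetical two-route model that starts without violations."""
    routes = {
        "r1": {"entry": None, "exit": "sigA", "sensors": {"s1"}},
        "r2": {"entry": "sigA", "exit": None, "sensors": {"s2"}},
    }
    return routes, {("s1", "s2")}
```

Starting from `demo_model()`, calling `user_scenario_edit(routes, "r2")` turns `r1` into a violating route, and a subsequent `model_xform_edit(routes, "r1")` removes that violation again. Note that the entry edit is made on `r2` but changes the verdict for `r1`, whereas the exit edit changes the verdict of the edited route itself.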

Tools

The run-time performance of the following solutions was compared:

- Java: a naive Java implementation of the constraint check, as a hypothetical programmer would quickly implement it, without any special effort to improve performance.

- EIQ: hand-written graph patterns evaluated incrementally by EMF-IncQuery.

- OCL: the OCL interpreter of Eclipse, as it evaluates the OCL representation of the constraint check.

- OCL-CG: Java code generated from the same OCL expression by Eclipse OCL.

- OCL-IA: the OCL Impact Analyzer toolkit of Eclipse OCL, as it incrementally evaluates the same OCL expression.

- OCL2IQ: graph patterns automatically derived from the same OCL expression by a prototype partial implementation of the proposed mapping, likewise interpreted incrementally by EMF-IncQuery (new contribution extending previous Train Benchmark reports).

Environment

Dell Latitude E5420 Laptop, Intel Core i5-2430M @ 2.4 GHz CPU, 16 GB of DDR3-1066/1333 RAM, Samsung SSD 830; Eclipse Kepler on Java SE 1.7.0_05-b06 (with 2G maximum heap size) on Windows 7 x64; Eclipse OCL pre-release version 3.4.0.v20140124-1452, EMF-IncQuery 0.8.0 (nightly at 2014-03-05).

###Results

UserScenario measurements

Performance on instance models; empty cells mark model sizes on which a tool was not measured.

| UserScenario | Model size (# of nodes+edges) | 113K | 213K | 435K | 867K |
|---|---|---|---|---|---|
| Java | Batch Validation Time [ms] | 39 335 | 169 867 | | |
| | Continuous Validation Time [ms] | 36 248 | 167 891 | | |
| | Memory Footprint [kB] | 11 558 | 14 009 | | |
| OCL | Batch Validation Time [ms] | 11 116 | 36 157 | | |
| | Continuous Validation Time [ms] | 7 050 | 32 237 | | |
| | Memory Footprint [kB] | 9 129 | 15 304 | | |
| OCL-CG | Batch Validation Time [ms] | 33 160 | 126 461 | | |
| | Continuous Validation Time [ms] | 30 204 | 126 723 | | |
| | Memory Footprint [kB] | 12 148 | 17 755 | | |
| OCL-IA | Batch Validation Time [ms] | 12 578 | 36 444 | | |
| | Continuous Validation Time [ms] | 58 675 | 331 523 | | |
| | Memory Footprint [kB] | 15 864 | 26 073 | | |
| EMF-IncQuery | Batch Validation Time [ms] | 4 635 | 6 142 | 4 491 | 11 466 |
| | Continuous Validation Time [ms] | 2 | 2 | 1 | 1 |
| | Memory Footprint [kB] | 55 993 | 108 435 | 196 051 | 392 522 |
| OCL2IQ | Batch Validation Time [ms] | 4 904 | 6 205 | 4 373 | 10 485 |
| | Continuous Validation Time [ms] | 2 | 1 | 1 | 1 |
| | Memory Footprint [kB] | 58 193 | 118 319 | 207 120 | 412 347 |

ModelXFormScenario measurements

Performance on instance models; empty cells mark model sizes on which a tool was not measured.

| ModelXFormScenario | Model size (# of nodes+edges) | 113K | 213K | 435K | 867K |
|---|---|---|---|---|---|
| Java | Batch Validation Time [ms] | 37 916 | 135 652 | | |
| | Continuous Validation Time [ms] | 35 516 | 134 468 | | |
| | Memory Footprint [kB] | 12 031 | 16 672 | | |
| OCL | Batch Validation Time [ms] | 12 973 | 33 164 | | |
| | Continuous Validation Time [ms] | 6 391 | 29 199 | | |
| | Memory Footprint [kB] | 9 087 | 14 020 | | |
| OCL-CG | Batch Validation Time [ms] | 33 659 | 114 238 | | |
| | Continuous Validation Time [ms] | 29 031 | 100 660 | | |
| | Memory Footprint [kB] | 12 110 | 17 039 | | |
| OCL-IA | Batch Validation Time [ms] | 13 111 | 36 487 | 145 875 | 741 238 |
| | Continuous Validation Time [ms] | 64 | 64 | 48 | 61 |
| | Memory Footprint [kB] | 12 820 | 20 108 | 36 127 | 67 042 |
| EMF-IncQuery | Batch Validation Time [ms] | 5 439 | 1 949 | 4 377 | 11 772 |
| | Continuous Validation Time [ms] | 6 | 5 | 4 | 4 |
| | Memory Footprint [kB] | 55 251 | 102 274 | 198 060 | 386 982 |
| OCL2IQ | Batch Validation Time [ms] | 5 268 | 2 570 | 3 562 | 9 197 |
| | Continuous Validation Time [ms] | 22 | 21 | 18 | 12 |
| | Memory Footprint [kB] | 55 684 | 101 024 | 201 437 | 399 356 |

Analysis

The incremental strategy of EMF-IncQuery performs extremely well in the "Continuous Validation" workload, delivering practically immediate feedback after model manipulation, at the cost of increased memory footprint. Furthermore, comparison across benchmark instances with different model sizes confirms the theoretical result that this "Continuous Validation" time is practically independent of the size of the unchanging parts of the model. EMF-IncQuery memory consumption and "Batch Validation" time were found to scale approximately proportionally to model size, while OCL execution times grow at a rate between linear and quadratic in model size. Finally, the graph queries automatically generated using the proposed transformation (OCL2IQ) perform similarly to manually written EMF-IncQuery code (EIQ), outperforming pure Java as well as stateless or incremental OCL-based approaches.

The advantage of graph patterns at "Batch Validation" time likely stems from automatic query planning, while "Continuous Validation" times are a consequence of the deep caching of the Rete incremental evaluation strategy; these are two of the many potential benefits of the proposed approach. Thus translating OCL code to graph patterns is justified in this scenario.
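The scaling behaviour can be checked roughly against the published numbers by fitting a power law through two measurements. The sketch below is only an illustration: the helper name `scaling_exponent` is hypothetical, the input values are copied from the UserScenario measurements, and a fit through two points is a coarse estimate rather than a statistical result.

```python
import math


def scaling_exponent(size_small, value_small, size_large, value_large):
    """Exponent k of value ~ size**k fitted through two measurements."""
    return math.log(value_large / value_small) / math.log(size_large / size_small)


# OCL interpreter, Batch Validation Time [ms] at 113K and 213K elements.
ocl_time_k = scaling_exponent(113_000, 11_116, 213_000, 36_157)

# EMF-IncQuery, Memory Footprint [kB] at 113K and 867K elements.
eiq_memory_k = scaling_exponent(113_000, 55_993, 867_000, 392_522)
```

An exponent near 1 indicates growth proportional to model size, and an exponent near 2 indicates quadratic growth. With these inputs `ocl_time_k` falls between 1 and 2, while `eiq_memory_k` stays close to 1.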

The "Continuous Validation" times for OCL-IA are significantly worse in the case of the UserScenario workload than with the alternative model manipulation workload, ModelXFormScenario. The reason is that ModelXFormScenario clears the exit reference, which makes OCL-IA re-evaluation quick after the change and leads to efficient incrementality, while UserScenario clears the entry reference, which makes re-evaluation expensive. EIQ and OCL2IQ are much less sensitive to this choice, in line with theoretical predictions.

Remarks and Threats to Validity

Diverging from previous Train Benchmark experiments at the suggestion of Eclipse OCL leader Ed Willink, OCL evaluation was not invoked by substituting each model element as self, but only on a prefiltered list of instances of the context type of the constraint.

Note that the OCL query was produced by non-experts. Hand-optimized queries may perform better. However, the OCL2IQ approach received the same unoptimized query as input, so the comparison is fair.

The benchmark scenario was deliberately chosen as one where incremental approaches have potential advantages, and the selected query was complex to increase the role of automatic query optimization. Therefore the results do not show universal superiority of one tool over another, merely produce evidence that the proposed approach has legitimate use cases.

###Reproduction

OCL2IQ transformation

A prototype partial implementation of the proposed transformation is available as an Eclipse plug-in project at GitHub, with examples of usage here. Important notes:

- Currently only a subset of the transformation is actually implemented, sufficient for some tests as well as the benchmark presented here.

- In most cases, the transformation will indicate if the input OCL expression is not supported. This check, however, is currently incomplete; there are cases where the tool produces an output despite it not being a proper translation of the input. In particular, the set semantics of collection operations are not currently checked.

- In addition to the main graph pattern corresponding to the input OCL expression and the auxiliary patterns called from it, the tool may output some unused auxiliary patterns due to sloppy software engineering. These superfluous patterns are not transitively reachable from the main output patterns, and thus do not influence evaluation results or query performance. In the future, additional effort is required to tidy up the transformation code, so that no such auxiliary patterns are generated needlessly.

Downloadable measurement setup

The attached TrainBenchmark zip archive contains Eclipse project exports of the case study metamodel and instance models, benchmark implementations in the various tools, and the benchmark driver code. The code was largely the work of Benedek Izsó (except for the addition of OCL2IQ content), reused for this paper. The additional download contains a replacement for the hu.bme.mit.Train.constraintCheck.incQuery.0.6 project, in order to run the OCL2IQ generated graph patterns in EMF-IncQuery instead of the original hand-written ones (EIQ), which was the original purpose of this project in the Train Benchmark.

###Acknowledgement

This work was partially supported by the European Union and the State of Hungary, co-financed by the European Social Fund in the framework of TÁMOP 4.2.4. A/-11-1-2012-0001 ‘National Excellence Program’, and by the CERTIMOT (ERC_HU-09-01-2010-0003) and EU FP7 MONDO (ICT-2013.1.2) projects.
